Adventurous monkeys: choosing to explore the unknown

Communication between the amygdala and the ventral striatum regulates decision-making behavior by determining whether new options outweigh a known option with a guaranteed reward.

Author: Ella Cary

Neuroanatomy

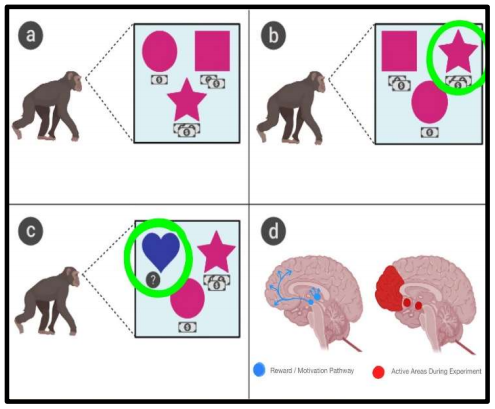

This article describes new research on the explore-exploit decision-making process of rhesus monkeys. It has been previously documented that animal subjects learn through repeated rewards via motivational circuits.1 Building on that work, this research dives into the neural circuits involved in choosing to explore a new option instead of selecting one with a known, guaranteed reward.

The amygdala is an almond-shaped structure in the temporal lobe of the brain that is known to play a role in processing emotion and motivation.2,3 The ventral striatum is a brain structure closely linked to how the brain processes reward.4 In a paper published in Neuron in 2019, Vincent Costa, Andrew Mitz, and Bruno Averbeck investigated whether motivational circuits in the brains of rhesus monkeys support decisions to explore new options rather than exploit an option with a guaranteed reward. They found that these motivational circuits were active during the monkeys' explore-exploit decisions and were important in guiding them. These findings point to the need for further research to determine the specific interactions between reward-system structures that regulate exploratory decision making.

The explore-exploit dilemma refers to deciding when to give up a known, immediate reward in order to explore a new option.5 In essence, the question is whether the potential value of the new option is high enough to justify giving up the guaranteed value of the known choice. This dilemma poses a problem for reinforcement learning, in which a subject receives consistent rewards for completing a task and is thereby conditioned to keep performing it.6 Motivational circuits include structures that receive dopamine input, such as the amygdala and ventral striatum. These circuits support learning from choices and their outcomes. However, it is unknown whether the same structures and circuits support the more complex processing that lets a subject decide whether to explore.7 Cortical activity is typically higher in humans and monkeys (compared with other mammals) when they forgo reinforced behaviors in order to explore novel alternatives.8 Dopamine has also been proposed to regulate explore-exploit choices. This suggests that brain structures receiving dopaminergic input, such as the ventral striatum and amygdala, also play a role in these choices.9
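The trade-off described above can be made concrete with a toy decision rule. The Python sketch below is hypothetical and is not the model used by Costa and colleagues. It makes two assumptions:

- Each option's value can be summarized as a single number, for example a reward probability between 0 and 1.
- An untried option earns an "exploration bonus" that shrinks each time it is sampled, because each sample leaves less to learn about it.

The function names `exploration_bonus` and `choose_novel` and the default bonus of 0.3 are illustrative assumptions.

```python
def exploration_bonus(base_bonus: float, times_sampled: int) -> float:
    """Hypothetical bonus for sampling an option.

    The bonus is largest for an untried option and shrinks as the option is
    sampled, since each sample leaves less uncertainty to resolve.
    """
    return base_bonus / (1 + times_sampled)


def choose_novel(novel_estimate: float, times_sampled: int,
                 best_known: float, base_bonus: float = 0.3) -> bool:
    """Toy rule: explore the novel option if its estimated value plus the
    exploration bonus exceeds the value of the best familiar option."""
    return novel_estimate + exploration_bonus(base_bonus, times_sampled) > best_known


if __name__ == "__main__":
    # First appearance: an uninformed estimate of 0.5 plus the full bonus (0.8)
    # beats a familiar option worth 0.7, so the rule explores.
    print(choose_novel(novel_estimate=0.5, times_sampled=0, best_known=0.7))  # True
    # After four samples the option looks worse (0.4) and the bonus has shrunk
    # to 0.06, so the rule goes back to exploiting the familiar option.
    print(choose_novel(novel_estimate=0.4, times_sampled=4, best_known=0.7))  # False
```

In this example the untried option wins on its first appearance because the bonus lifts it above the best known option. Once the option has been sampled and turns out to be worth little, the bonus no longer compensates, and the rule returns to the familiar choice.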

In this study, three rhesus monkeys were trained on a task in which they chose among three options with different reward values. The monkeys learned the choice-outcome relationships by selecting each option, and the same set of three images was presented repeatedly, at least ten times. However, the time available to learn about each set was limited. One option was then randomly replaced with a novel image, forming a new set that was repeated for several trials. Whenever a novel image was first introduced, the monkey could learn its value only by choosing to explore it. Doing so meant passing over the other two options, whose outcomes were already known. This process continued until a novel image had been introduced 32 times in total. During each trial, the researchers recorded neural activity, focusing on structures that receive dopaminergic input such as the amygdala and ventral striatum. They also recorded choice reaction times.10

Overall, when novel images were first introduced, the monkeys preferred to explore them rather than exploit the two options with known rewards. After this initial exploration, however, they shifted to choosing the best alternative option. In some cases a novel option turned out to be worth less than the best alternative. The monkeys then forgo it after initial exploration and selected whichever of the remaining two options had the higher value. This shows that they combined what they had learned by exploring with the values they already knew. As the value of the best alternative option increased, the monkeys selected the novel option less often. This indicates that, beyond some point, exploration is forgone in favor of exploiting a high-value reward. Analysis of choice reaction times (RTs) showed that, when a novel image was available, reaction times were longer on trials in which the monkeys chose the novel option over the best alternative.10
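The relationship described above, where the novel option is chosen less often as the best alternative becomes more valuable, can be illustrated with a softmax choice rule. A softmax rule is a common way of turning option values into choice probabilities in reinforcement-learning models. This is a hypothetical sketch. The function name `p_choose_novel`, the values on a 0-to-1 scale, and the inverse temperature `beta` are assumptions for illustration, not parameters reported in the study.

```python
import math


def p_choose_novel(novel_value: float, alt_values: list[float], beta: float = 5.0) -> float:
    """Softmax probability of picking the novel option (hypothetical model).

    novel_value: value assigned to the novel option, including any exploration bonus.
    alt_values: values of the familiar options.
    beta: inverse temperature; larger values make choices more deterministic.
    """
    values = [novel_value] + list(alt_values)
    top = max(values)  # subtract the max before exponentiating for numerical stability
    weights = [math.exp(beta * (v - top)) for v in values]
    return weights[0] / sum(weights)


if __name__ == "__main__":
    # Hold the novel option (0.7) and the weaker familiar option (0.2) fixed and
    # raise the value of the best alternative: P(novel) falls as exploiting
    # the best alternative becomes more attractive.
    for best_alt in (0.4, 0.6, 0.8, 1.0):
        p = p_choose_novel(0.7, [best_alt, 0.2])
        print(f"best alternative = {best_alt:.1f} -> P(choose novel) = {p:.2f}")
```

Raising `beta` makes the rule pick the highest-valued option more consistently, while lowering it makes choices more random. In practice such a parameter would be fit to behavioral data rather than assumed.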

The researchers recorded the activity of 329 amygdala neurons and 281 ventral striatum neurons. Baseline firing rates were higher in amygdala neurons than in ventral striatum neurons, and spike widths were shorter for ventral striatum neurons. As the monkeys learned the value of their choices, ventral striatum neurons signaled immediate reward value more clearly than amygdala neurons did. When a novel option was first introduced, neuronal activity was the same whether the option later turned out to be of high, medium, or low value. As learning progressed, firing rates increased when the monkey selected a high-value novel option and decreased when it selected a low-value one. Overall, pronounced activity was observed in the prefrontal cortex, amygdala, and ventral striatum when the monkeys chose to explore novel options. All of these areas receive dopamine input as part of the motivation and reward circuitry.

This study demonstrated similarities in brain activity between reward-motivated decision making and exploratory periods in which a known reward was forgone. Both the ventral striatum and the amygdala were active during these decision periods, though not at the same time or in the same way. In general, brain activity during exploratory choices followed the path of the dopaminergic mesolimbic circuit, which is central to reward and motivation in both monkeys and humans. This level of complex processing and choice-making most likely results from several neural circuits working together. Even so, projections between the amygdala and ventral striatum are known to drive reward-seeking behavior and positive reinforcement and to influence dopamine signaling.11 This research highlights the need to determine which specific connections and circuits between the amygdala and ventral striatum regulate exploratory decision making. Future studies could also record activity in specific brain structures and cell groups. Such recordings would help explain the advanced choice strategies the monkeys used to navigate the explore-exploit trade-off.

References

Tabor, K., Black, D., Porrino, L., & Hurley, R. (2012). Neuroanatomy of Dopamine: Reward and Addiction. The Journal of Neuropsychiatry and Clinical Neurosciences, 24(1), 1-4. doi:10.1176/appi.neuropsych.24.1.1

Chesworth, R., & Corbit, L. (2017). The Contribution of the Amygdala to Reward-Related Learning and Extinction. The Amygdala - Where Emotions Shape Perception, Learning and Memories. doi:10.5772/67831

LeDoux, J. (2007). The amygdala. Current Biology, 17(20), 868-874. doi:10.1016/j.cub.2007.08.005

Rasia-Filho, A. A., Londero, R. G., & Achaval, M. (2000). Functional activities of the amygdala: An overview. Journal of Psychiatry & Neuroscience, 25(1), 14–23.

Arias-Carrión, O., Stamelou, M., Murillo-Rodríguez, E., Menéndez-González, M., & Pöppel, E. (2010). Dopaminergic reward system: a short integrative review. International Archives of Medicine, 3, 24. doi:10.1186/1755-7682-3-24

Newton, P. (2009, April 26). What is Dopamine? Retrieved April 10, 2020, from https://www.psychologytoday.com/us/blog/mouse-man/200904/what-is-dopamine

Kidd, C., & Hayden, B. Y. (2015). The psychology and neuroscience of curiosity. Neuron, 88, 449–460. doi:10.1016/j.neuron.2015.09.010

Costa, V. D., Dal Monte, O., Lucas, D. R., Murray, E. A., & Averbeck, B. B. (2016). Amygdala and Ventral Striatum Make Distinct Contributions to Reinforcement Learning. Neuron, 92(2), 505–517. doi:10.1016/j.neuron.2016.09.025

Kakade, S., & Dayan, P. (2002). Dopamine: generalization and bonuses. Neural Networks, 15, 549–559.

Costa, V. D., Mitz, A., & Averbeck, B. B. (2019). Subcortical Substrates of Explore-Exploit Decisions in Primates. Neuron, 103, 533–545. doi:10.1016/j.neuron.2019.05.017

Namburi, P., Beyeler, A., Yorozu, S., Calhoon, G. G., Halbert, S. A., Wichmann, R., Holden, S. S., Mertens, K. L., Anahtar, M., Felix-Ortiz, A. C., et al. (2015). A circuit mechanism for differentiating positive and negative associations. Nature, 520, 675–678. doi:10.1038/nature14366
